How to Do AI Filmmaking (Tutorial)

AI FILMMAKING IS HERE TO STAY…

A quick look at recent work in the field shows exactly the kind of creative projects filmmakers can now make with these tools, on their own terms.

AI filmmaking is currently in its ‘painters who resent that photography is now a thing’ phase. If you never felt that way, or you’re already past it, this mini-course is for you.

Right here on this page I’m going to give you a full breakdown of how we created this acclaimed AI trailer, and all of our best AI filmmaking secrets so you can start making your own killer AI projects.

Concept

Most people think AI filmmaking is just you clicking a button, and then a film comes out the other end.

Those people couldn’t be more wrong.

You’re going to need to put in just as much effort as you would for traditional digital filmmaking.

The only difference is that you’ll be able to accomplish things that simply weren’t possible on tiny budgets, short timeframes, and small teams before AI.

That means putting work into your concept, which is why I developed a concept for a movie I would actually want to see.

So many people skimp on story, and you don’t want to do that.

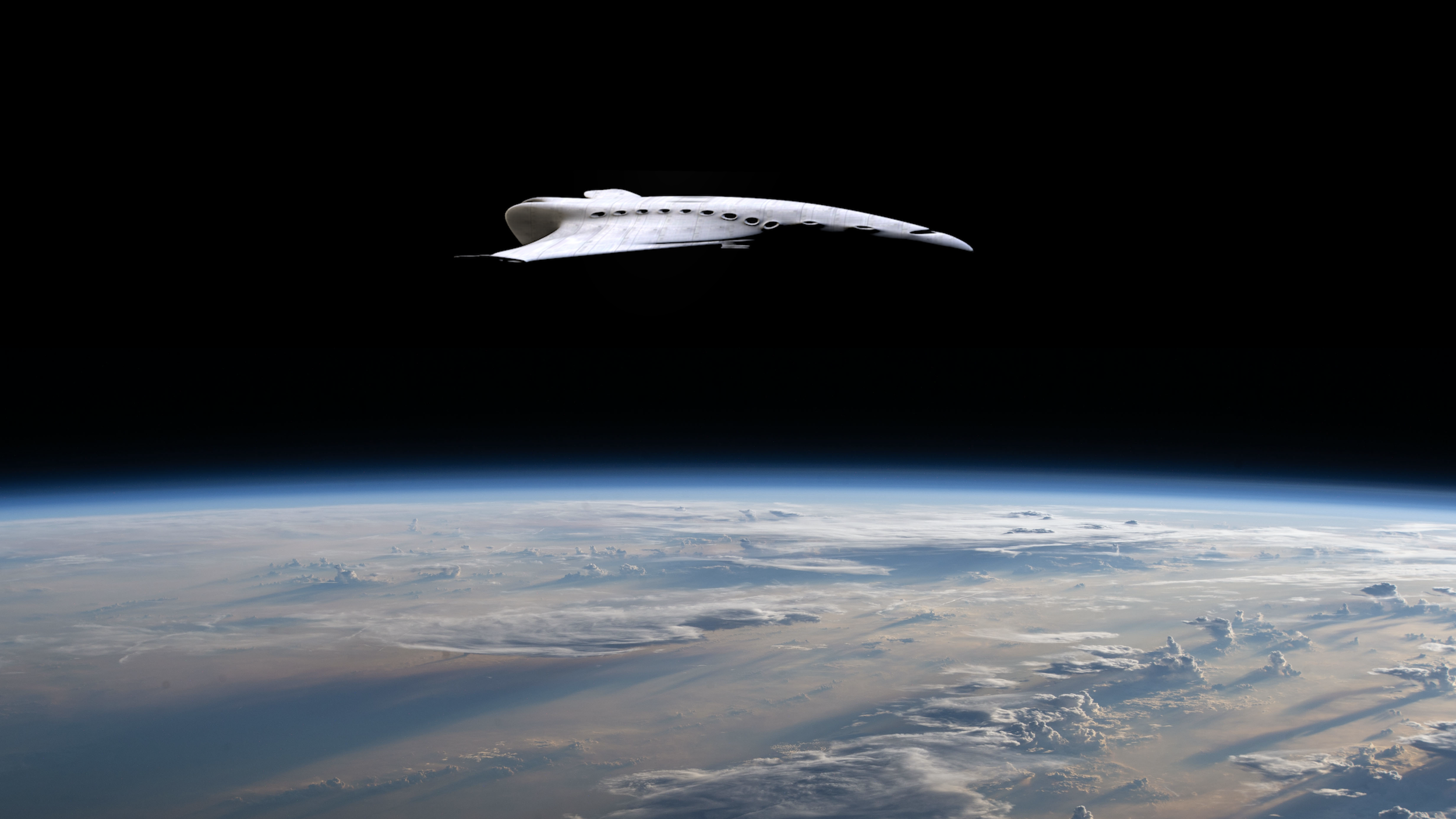

This project is called MANDELA.

It’s about a secretive group that utilizes a mysterious machine to alter our timeline, leaving only memories of how things were in its aftermath.

This concept is ready to be turned into a feature tomorrow, if I wanted. You don’t have to go to those lengths, but the more you like your story, the more successful the project is likely to be.

Look Dev

The next key was developing the look.

Typically I have a vision for this about the same time I have the idea for the concept in general, but you can develop the look at any time in pre-production.

Midjourney is a great place to iterate. Alternatively, you can build a moodboard of the look you’re going for in ShotDeck, Pinterest, or even your camera roll.

You can then use this reference material to inform your prompts when you start generating video.

It’s much easier to prompt for the video outputs you want when you already know exactly what you want to see.

Think about everything from the style, to the color palette, even down to the shooting style you want, like handheld, tripod, etc.
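One way to keep those look-dev decisions consistent across dozens of generations is to treat them as a fixed template and only swap out the shot description. The sketch below is a hypothetical Python helper (`LookDev` and `build_prompt` are illustrative names, not part of Runway, Midjourney, or any other tool) that simply assembles that prompt text, which you would then paste into whichever text-to-video prompt box you use. The example values are placeholders, not the prompts used for MANDELA.

```python
from dataclasses import dataclass


@dataclass
class LookDev:
    """Hypothetical container for the look decisions taken from your moodboard."""
    style: str          # overall style, e.g. "cinematic sci-fi thriller"
    color_palette: str  # e.g. "cold blues with warm practical lights"
    camera: str         # shooting style, e.g. "handheld" or "tripod"


def build_prompt(subject: str, look: LookDev) -> str:
    """Combine a per-shot description with the fixed look so every prompt shares it."""
    parts = [
        f"{look.camera} shot: {subject}",
        f"style: {look.style}",
        f"color palette: {look.color_palette}",
    ]
    return ". ".join(parts) + "."


if __name__ == "__main__":
    # Placeholder look; swap in the decisions from your own look dev.
    example_look = LookDev(
        style="cinematic sci-fi thriller, 35mm film grain",
        color_palette="cold blues with warm practical lights",
        camera="handheld",
    )
    print(build_prompt("a scientist powers up a mysterious machine in a dark lab", example_look))
```

Because only `subject` changes from shot to shot, the style, palette, and camera language stay word-for-word identical in every prompt, which tends to make outputs easier to cut together.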

Dialogue

Based on the story I created, I drew up lines of dialogue that got across key points of the plot, without giving away too much.

To create the actual voices, I used Eleven Labs’ Speech-to-Speech feature.

Most people don’t know about this feature, but it lets you deliver the lines any way you want, even with badly recorded audio, and Eleven Labs will recreate your performance line for line, in any voice you choose, at very high quality.

This is a game-changer, and as of now, it’s the fastest, most efficient way to do AI dialogue.

Video Generation

I used Runway’s Gen-3 to create all of these video clips.

There are two key ways to generate videos at the moment:

Text-to-Video

Image-to-Video

Text-to-Video allows you to type a prompt of what you’d like to see, and have a video clip generated based on that prompt.

This method is excellent because it’s efficient, and once you learn how to get the results you want inside a single platform, you’re off to the races.

Text-to-Video in Runway’s Gen-3 is the method I used to create the majority of the clips in this trailer.

Image-to-Video, as you likely guessed, lets you upload an image, and the platform will create a video clip based on it (plus your text prompt, if you want).

What’s great about Image-to-Video is that you can first develop more precise looks in an AI image generator like Midjourney, which gives you better consistency, including character consistency, across your later video generations.

It’s also great because prompting this way typically allows you to use both an image and a text prompt in combination, to guide your video generation.

Editing

Once you have all your videos generated, you want to move on to editing. For trailers, our favorite method is Music-Based Editing.

Music-Based Editing is where you design the entirety of your trailer around a music track or tracks.

Sometimes this is a traditional song, often redone in a more cinematic way to match the vibe of the film. Sometimes it’s a track made up entirely of cinematic sound design to create the musical structure of the trailer, without being an actual song. For MANDELA I used the latter.

I used sound design from a myriad of places, including our SINGULARITY Sound Effects Library, as well as music tracks from our OCTIVE Cinematic Music packs.

There are countless other tools we use, from PixelCut for upscaling image generations and removing backgrounds, to Photoshop’s Generative Fill for changing elements in scenes.

You can see a full list of the best AI filmmaking tools here:

The Wave

Hopefully this has given you more than enough to get started making your own great AI films and videos.

This tech is still in its infancy, and it’s only going to get more incredible from here, but now is the perfect time to start learning, so that as these tools mature, you can take full advantage of them to make your projects a reality.

Remember, the AI wave is here to stay, so now’s as good a time as any to learn to surf.